Ethics, Design and Cybersecurity

Bella Jones

Connections Behind Dark UX Patterns and Social Engineering Tactics

If you work in an organization, you’ve probably had to take a cybersecurity training course at some point. Whether or not we work in cybersecurity, most of us breeze through the slides or videos, only half listening to the warnings about spear phishing emails and hacking tactics. We complete the training, then tuck away the lessons until next year, when we have to do it all again. But what if we took those cybersecurity lessons and applied them to software and interface design?

As a Senior User Experience (UX) Designer at ThreatQuotient, I have one foot in the UX design world and the other in the cybersecurity world. As a designer, I naturally interpret the world around me through the lens of psychology and cognitive behavior. After I entered cybersecurity, the more I learned about concepts such as social engineering, the more connections I saw between the social engineering tactics attackers use and the dark patterns some UX designers use to manipulate user behavior.

Before diving into why the connection between these two concepts is significant, let’s look at what social engineering is and why it matters in this context. Social engineering is a method of exploitation in which cyber attackers gain access to sensitive data or systems through human factors. In other words, rather than exploiting technical gaps in an organization’s networks and hardware, these attackers prey on the weaknesses of the people working within it.

“All of the firewalls and encryption in the world can’t stop a gifted social engineer from rifling through a corporate database.

If an attacker wants to break into a system, the most effective approach is to try to exploit the weakest link—not operating systems, firewalls or encryption algorithms—but people.”

– KEVIN MITNICK, THE ART OF DECEPTION

The vulnerabilities these attackers target are psychological in nature. They might exploit a person’s tendency to defer to authority, or drop a familiar name from the target’s personal or professional network. They might also use intimidation, imposing a false deadline or making threats to pressure the target into complying with their demands. These strategies prey on weaknesses that are innate to human psychology and cognition.

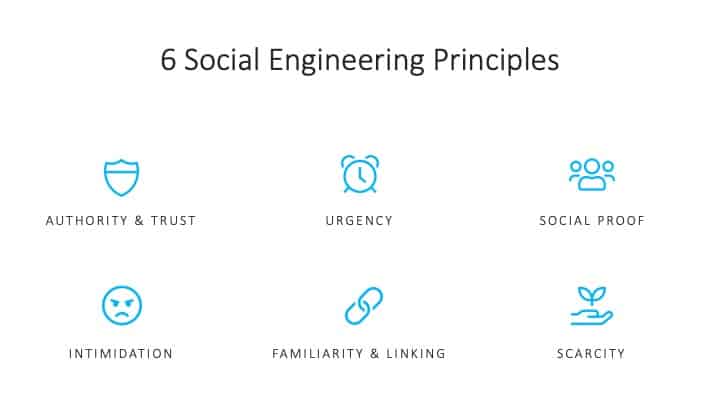

A social engineer might combine several of these psychological vulnerabilities to their advantage. For example, an attacker might begin by posing as a trustworthy colleague in the IT department: close enough to be familiar within the organization, but distant enough to go unquestioned. They might make a phone call that mirrors the kind of call a real IT staffer would make to an employee, perhaps following up on an IT request that was actually made or mentioning a colleague the target knows. As trust builds, the social engineer can lean on the authority of their fake IT role to ask the target for sensitive information, and use that same authority and trust to convince the victim that the request is time-sensitive. Such an ask is often disguised as a simple, harmless request. Yet by combining several tools from the social engineering toolkit (see Figure 1), including implied authority, trust-building, and urgency, the attacker can obtain sensitive information in a single conversation.

Figure 1: 6 social engineering principles

Understanding the basis of this hacking strategy is important because it shares characteristics with another form of psychological hacking that exists in UX design: dark patterns. Dark patterns are manipulative design patterns used on websites and in digital products to trick users into taking an action, or series of actions, that they did not originally intend to take.

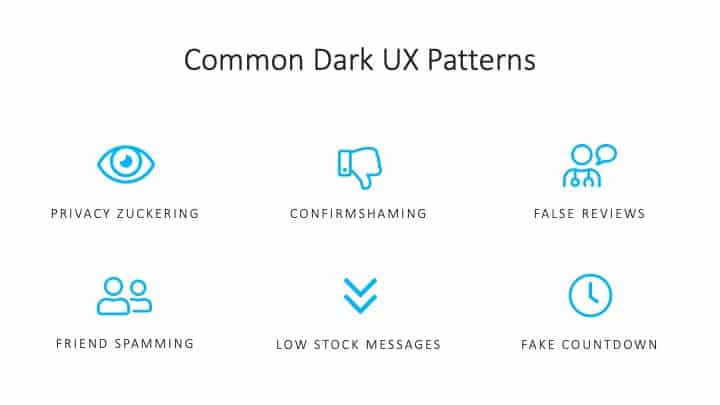

This concept has been a major point of discussion in the UX and UI design world, as designers and practitioners have grappled with the realization that many of the patterns we’ve grown accustomed to using can be exploited with dishonest intentions. Although we usually know the typical patterns and expectations for online behavior, we sometimes find ourselves lost while navigating a site or unintentionally subscribed to a service we do not actually want. These dark patterns primarily benefit a business’s profitability, whether by gathering and selling data about users, tricking users into subscriptions or purchases, or inflating click-through metrics. The systematic naming and shaming of these ubiquitous practices has shown how much work remains for UX designers to create interfaces that are not only delightful, but also ethical.

“If a company wants to trick you into doing something, they can take advantage of it by making a page look like it is saying one thing when in fact it is saying another.”

– HARRY BRIGNULL, DARKPATTERNS.ORG

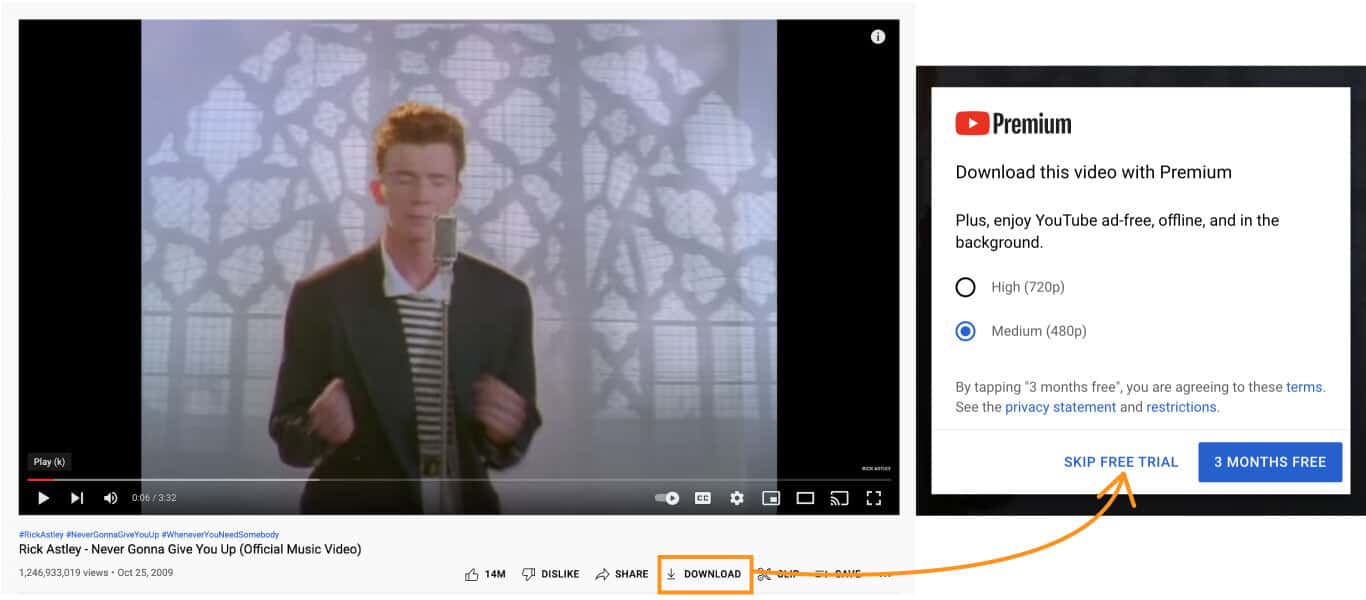

As users, we become accustomed to certain patterns when we repeat similar workflows in the digital products we use every day in our professional and personal lives. We don’t always read every word on a page; instead, we rely heavily on patterns and assumptions to make decisions. For example, UX design has a well-understood convention for the placement and styling of buttons. Buttons are either primary or secondary: primary buttons are styled more prominently and correspond to the intended action of the workflow, while secondary buttons sit near the primary button and offer a related action the user may want to take. Many of us know this pattern implicitly and use it every day.

Figure 2: YouTube Video Pattern

However, some sites are designed to take advantage of the patterns users have been conditioned to follow. In the example in Figure 2, a modal appears when the user clicks the “Download” button on a YouTube video. Although the user simply intended to download the video, the option presented as the primary button would actually subscribe them to 3 months of YouTube Premium. A user who habitually follows the typical pattern here could easily subscribe to this service and then forget to cancel after 3 months. The action the user actually expects, simply downloading the video, is disguised as a secondary button with confirmshaming language meant to make the user feel guilty for declining the free offer. This tactic combines three dark patterns known as visual interference, confirmshaming, and the roach motel. Many more dark patterns (see Figure 3) are all too common on the sites and digital products we use every day.

Figure 3: Common Dark UX Patterns

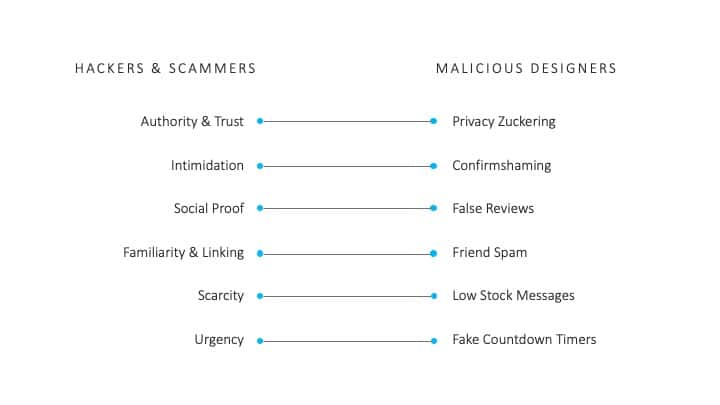

Even among these few examples, you can start to see how the intentions behind these attack tactics and design patterns are similar. Figure 4 maps some of these connections side by side. In the social proof and false reviews example, a social engineering attacker may try to create a false association between themselves and someone the victim knows personally in order to build trust. The false review dark pattern works the same way on e-commerce and social networking sites: malicious designers take advantage of our susceptibility to social proof and peer pressure to make certain actions more appealing.

Figure 4: Connections

Viewing dark UX patterns through a social engineering lens emphasizes the responsibility of designers to build ethical principles into their practices and processes. The technology we use every day, whether an enterprise tool or a social media platform, is powerful and has a huge impact on our lives. We are inextricably linked with the patterns and interactions we use daily. Because of that, designers have a moral responsibility to respect this connection and create digital experiences that guide users toward, and enable, their desired outcomes, rather than manipulating users to bolster engagement metrics.

At ThreatQuotient, this is one of the core beliefs our UX design team applies in its practice. We conduct hands-on user research, build rapid wireframe prototypes, and create high-fidelity interfaces with ethical design in mind. Our goal is to deliver ethical, user-centered solutions that empower security analysts and security operations centers (SOCs) to make key decisions based on data that is relevant, digestible, and actionable.

To learn more about ThreatQuotient and see the ThreatQ Platform in action, please visit: https://www.threatq.com/threatq-online-experience-registration/

Sources:

6 social engineering principles: https://cyberkrafttraining.com/2021/01/21/social-engineering-principles/

Dark UX Patterns: https://www.deceptive.design/
