Power the SOC of the Future with the DataLinq Engine – Part 3

LEON WARD

In my first blog in this three-part series, we discussed the importance of data to the modern SOC and the ThreatQ DataLinq Engine's unique approach to connecting the dots across all data sources, tools and teams to accelerate detection, investigation and response. In part two, we explored how the DataLinq Engine enables SOCs to strategically use and operationalize data across a five-stage process, beginning with Ingest (gaining access to structured and unstructured input data via Marketplace apps and an open API) and Normalize (automatically translating data from different sources, formats and languages into a single object).

In this final blog of the series, we’ll cover the remaining three stages in the DataLinq Engine processing pipeline: Correlate, Prioritize and Translate.

Correlate

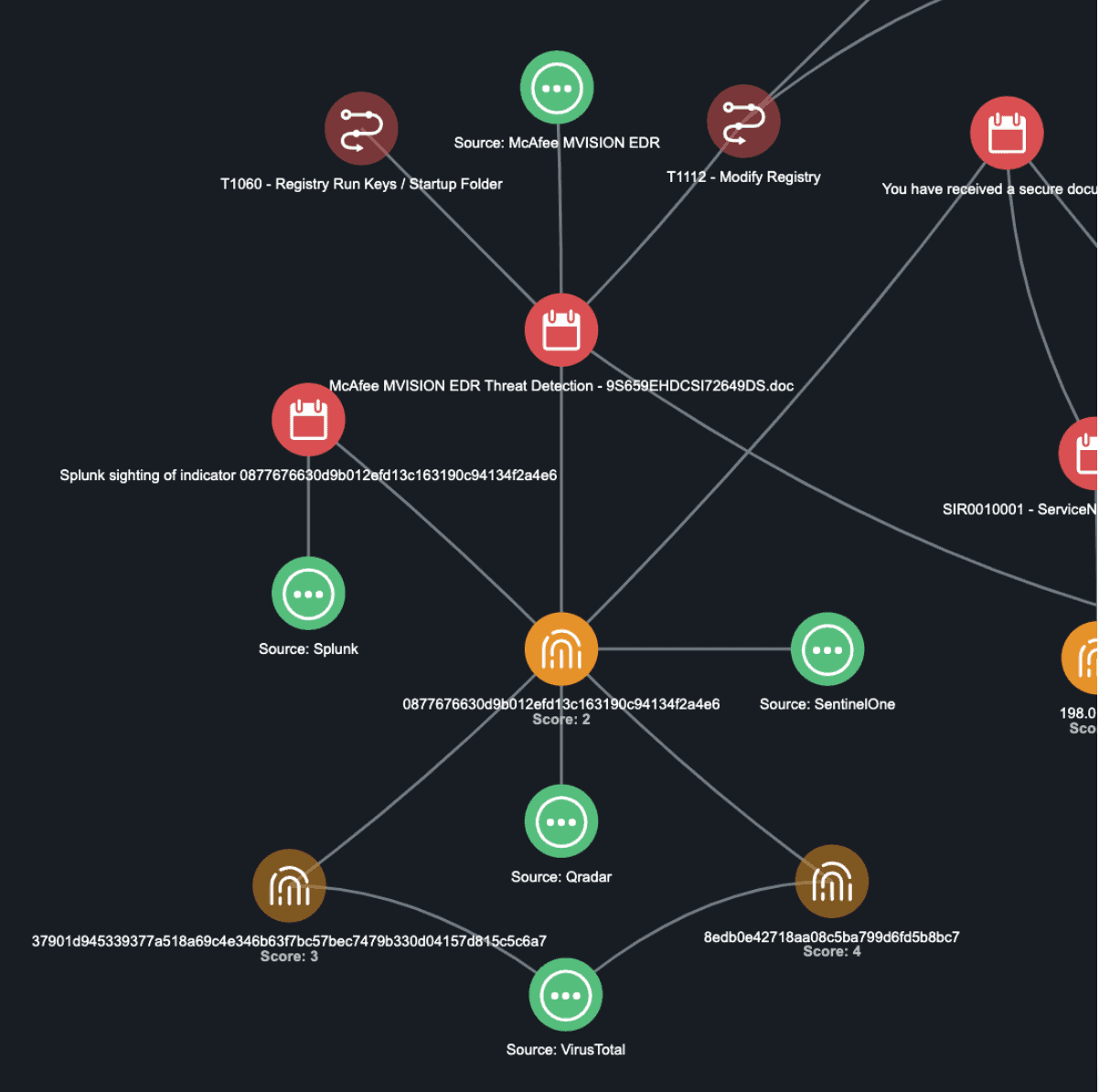

With a solid foundation of normalized object values in place, we can automatically or manually gather supporting information about an object from integrated products. For example, investigating a single file hash may involve asking:

- EDR: Does this file hash appear on any endpoints, and what are they?

- AV/SIEM: Are any security events reported about this hash?

- Sandbox: Are there malware analysis reports, and what were the results?

- Incident / Ticketing system: Has this hash been reported as an artifact associated with a breach/incident in the past?

- Intelligence: What adversaries, campaigns or malware families has this hash been attributed to, and what were the goals, motivations and methods behind them, including mappings to MITRE ATT&CK TTPs?
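As a rough illustration of that fan-out, the Python sketch below asks several integrated products about one file hash and keeps each product's answer separately. The connector functions (`edr_lookup`, `sandbox_lookup`, `ticketing_lookup`) and the `enrich` helper are hypothetical stubs, not ThreatQ or vendor APIs; a real deployment would call each product's own integration.

```python
from typing import Callable, Dict

Findings = Dict[str, object]
# Hypothetical connector shape: takes an object value, returns that product's findings.
Connector = Callable[[str], Findings]


def edr_lookup(file_hash: str) -> Findings:
    # Stub for an EDR query: which endpoints has this hash been seen on?
    return {"endpoints": ["host-17", "host-42"]}


def sandbox_lookup(file_hash: str) -> Findings:
    # Stub for a sandbox query: is there a malware analysis report?
    return {"verdict": "malicious"}


def ticketing_lookup(file_hash: str) -> Findings:
    # Stub for a ticketing query that is currently unreachable.
    raise ConnectionError("ticketing system timed out")


def enrich(value: str, connectors: Dict[str, Connector]) -> Dict[str, Findings]:
    """Ask every integrated product about one object value, keeping results per source."""
    results: Dict[str, Findings] = {}
    for source, lookup in connectors.items():
        try:
            results[source] = lookup(value)
        except Exception as exc:
            # One failing integration should not block the rest of the investigation.
            results[source] = {"error": str(exc)}
    return results


if __name__ == "__main__":
    sha256 = "e3b0c44298fc1c149afbf4c8996fb92427ae41e4649b934ca495991b7852b855"
    context = enrich(
        sha256,
        {"edr": edr_lookup, "sandbox": sandbox_lookup, "ticketing": ticketing_lookup},
    )
    for source, findings in context.items():
        print(source, findings)
```

Keeping results keyed by source means a timeout in one product degrades the picture rather than stopping it.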

This is just the beginning of the investigation. Consider the other objects within the same event, and the benefit of quickly answering key questions about each of them by pulling data from across the deployed defenses:

- Who is this identity?

- What are the methods behind the malware family detected?

- What’s the history of that URL, or domain?

- What additional context can be pulled in to confirm whether the C2 traffic detection is a true positive?

This is where correlation comes in. As more data and context are learned, the engine continually updates the object's record, linking it to additional sources, events and tools. Correlating everything into a single record is essential for assessing an object's priority or relevance, not only when it is first seen but throughout its lifespan, based on the sum of all information known about it.
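A minimal sketch of the single-record idea, assuming values have already been normalized: each `(type, value)` pair maps to exactly one record, and every new event, source or piece of enrichment updates that record instead of creating a duplicate. The `Correlator` and `ObjectRecord` names are hypothetical and illustrate the concept rather than how the DataLinq Engine stores data.

```python
from dataclasses import dataclass, field
from typing import Dict, Iterable, Set, Tuple

Key = Tuple[str, str]  # (object type, normalized value)


@dataclass
class ObjectRecord:
    obj_type: str
    value: str
    sources: Set[str] = field(default_factory=set)  # tools that reported this object
    events: Set[str] = field(default_factory=set)  # events the object appeared in
    context: Dict[str, object] = field(default_factory=dict)  # enrichment, keyed by source


class Correlator:
    """Keeps exactly one record per (type, value) and folds every observation into it."""

    def __init__(self) -> None:
        self.records: Dict[Key, ObjectRecord] = {}

    def _record(self, key: Key) -> ObjectRecord:
        if key not in self.records:
            self.records[key] = ObjectRecord(*key)
        return self.records[key]

    def observe_event(self, event_id: str, source: str, objects: Iterable[Key]) -> None:
        # An event arrives already broken into objects; link each one to the event and source.
        for key in objects:
            rec = self._record(key)
            rec.sources.add(source)
            rec.events.add(event_id)

    def add_context(self, key: Key, source: str, data: Dict[str, object]) -> None:
        # Enrichment learned later updates the same record rather than creating a new one.
        self._record(key).context[source] = data

    def related(self, key: Key) -> Set[Key]:
        """Other objects that share at least one event with `key`."""
        rec = self.records.get(key)
        if rec is None:
            return set()
        return {k for k, r in self.records.items() if k != key and r.events & rec.events}


if __name__ == "__main__":
    c = Correlator()
    file_hash = ("md5", "d41d8cd98f00b204e9800998ecf8427e")
    c.observe_event("evt-1", "siem", [file_hash, ("fqdn", "evil.example.com"), ("ip", "203.0.113.7")])
    c.observe_event("evt-2", "edr", [file_hash, ("identity", "jdoe")])
    c.add_context(file_hash, "sandbox", {"verdict": "malicious"})
    rec = c.records[file_hash]
    print(sorted(rec.sources), sorted(rec.events))  # ['edr', 'siem'] ['evt-1', 'evt-2']
    print(len(c.related(file_hash)))  # 3
```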

Figure 1. A visual representation of security events, broken down into objects and then presented as a correlated whole

Prioritize

Although the prioritization of data objects is automated, it remains under the control of the teams using ThreatQ. Flexibility in how data is scored is key to identifying the right threat objects, so that protections can be exported automatically to the right tools. Getting the right data to the right tools at the right time, for the right organization, has always been the goal. Once an object's score passes a threshold, it can trigger the automatic deployment of mitigations, additional analysis or other responses.

Common questions addressed when prioritizing data include:

- Is this IP address related to an active campaign we’re tracking?

- Is this malware known to exploit a vulnerability we’re exposed to?

- Is the actor behind this attack known to target organizations in our vertical?
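One simple way to turn questions like these into a score is a weighted sum with a team-controlled threshold. In the hypothetical sketch below, the weights, the threshold of 60 and the `on_threshold` callback are illustrative assumptions, not ThreatQ's actual scoring model.

```python
from dataclasses import dataclass, field
from typing import Callable, Set

# Hypothetical weights and threshold; in practice these would be tuned by the team.
WEIGHTS = {"active_campaign": 40, "exposed_vulnerability": 35, "targets_our_vertical": 25}
THRESHOLD = 60


@dataclass
class ThreatObject:
    value: str
    campaigns: Set[str] = field(default_factory=set)
    exploited_cves: Set[str] = field(default_factory=set)
    actor_target_verticals: Set[str] = field(default_factory=set)


@dataclass
class OrgProfile:
    tracked_campaigns: Set[str]
    exposed_cves: Set[str]
    vertical: str


def score(obj: ThreatObject, org: OrgProfile) -> int:
    """Add a weight for each prioritization question that is answered 'yes'."""
    total = 0
    if obj.campaigns & org.tracked_campaigns:  # related to an active campaign we track?
        total += WEIGHTS["active_campaign"]
    if obj.exploited_cves & org.exposed_cves:  # exploits a vulnerability we're exposed to?
        total += WEIGHTS["exposed_vulnerability"]
    if org.vertical in obj.actor_target_verticals:  # actor targets our vertical?
        total += WEIGHTS["targets_our_vertical"]
    return total


def prioritize(obj: ThreatObject, org: OrgProfile,
               on_threshold: Callable[[ThreatObject, int], None]) -> int:
    s = score(obj, org)
    if s >= THRESHOLD:
        on_threshold(obj, s)  # e.g. queue a mitigation export or further analysis
    return s


if __name__ == "__main__":
    org = OrgProfile({"campaign-x"}, {"CVE-2021-44228"}, "finance")
    ip = ThreatObject("203.0.113.7", campaigns={"campaign-x"}, exploited_cves={"CVE-2021-44228"})
    prioritize(ip, org, lambda o, s: print(f"{o.value} scored {s}: exporting mitigation"))
```

Keeping the weights and threshold as plain data is what makes this kind of scoring team-tunable: changing priorities means editing values, not code.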

Elements like these help ensure the right objects are prioritized for action. Before taking action, however, the data must be translated back into the appropriate language and format for each tool.

Translate

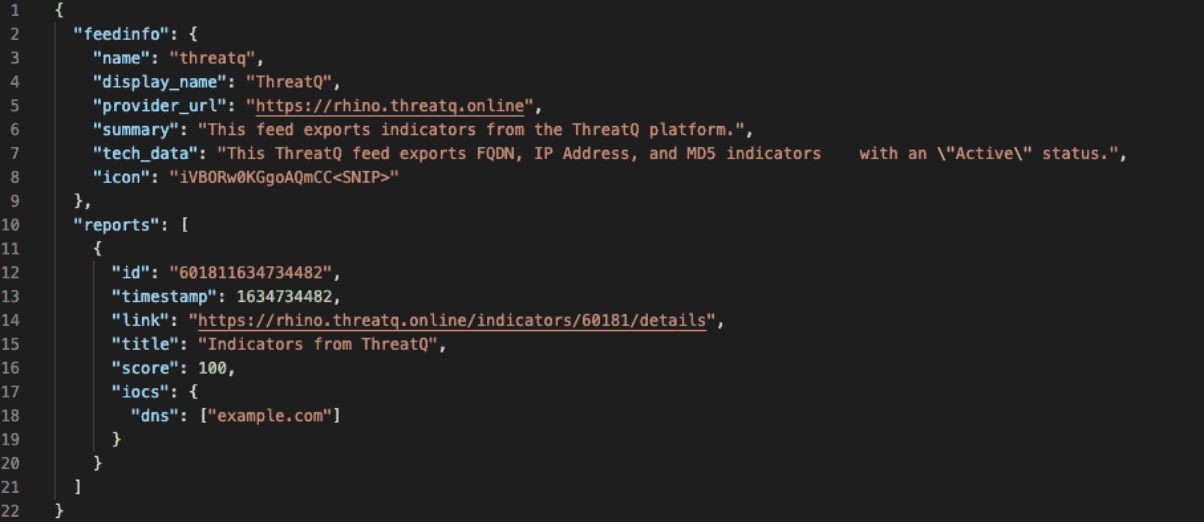

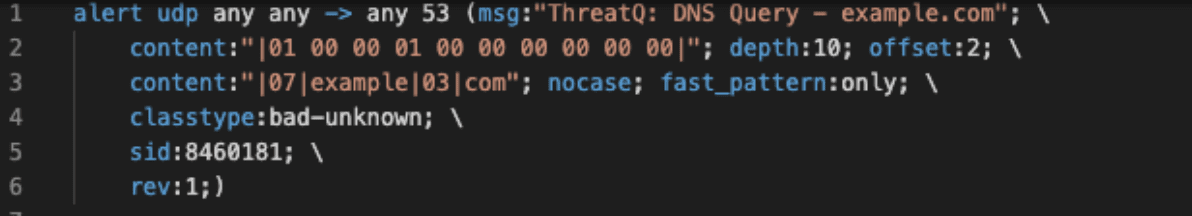

The translation approach and process vary with the action to be taken and the external product or service that will take it. Consider the detection of a malicious FQDN, evil.example.com. For different tools to act on it, the detection data may need to be expressed in very different languages:

Figure 2. VMware Carbon Black

Figure 3. Cisco FirePOWER (Snort) language

The DataLinq Engine now makes it possible to automatically generate sets of detection data for detection and prevention tools, each focused on that tool's use case and built from prioritized data so that resources are used intelligently.
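To make the evil.example.com example concrete, the sketch below derives two tool-specific forms from the same FQDN: a Snort rule that matches the domain as it appears in a DNS query, and a Carbon Black-style search term. It does not reproduce Figures 2 and 3. The `domain:` query syntax is an assumption based on Carbon Black EDR process search, and the rule `sid` and the `TRANSLATORS` registry are hypothetical; check each product's documentation before deploying anything like this.

```python
import re
from typing import Callable, Dict, List

# One DNS label: 1-63 letters, digits or hyphens, not starting or ending with a hyphen.
_LABEL = re.compile(r"^[A-Za-z0-9](?:[A-Za-z0-9-]{0,61}[A-Za-z0-9])?$")


def fqdn_labels(fqdn: str) -> List[str]:
    labels = fqdn.rstrip(".").split(".")
    if not all(_LABEL.match(label) for label in labels):
        raise ValueError(f"not a valid FQDN: {fqdn!r}")
    return labels


def to_snort_rule(fqdn: str, sid: int) -> str:
    # DNS carries names as length-prefixed labels ending in a zero byte, so the
    # rule matches that wire form, e.g. |04|evil|07|example|03|com|00|.
    wire = "".join(f"|{len(label):02X}|{label}" for label in fqdn_labels(fqdn)) + "|00|"
    return (
        f'alert udp $HOME_NET any -> any 53 (msg:"DNS lookup of {fqdn}"; '
        f'content:"{wire}"; nocase; sid:{sid}; rev:1;)'
    )


def to_carbon_black_query(fqdn: str) -> str:
    # ASSUMPTION: a Carbon Black EDR-style search term "domain:<fqdn>";
    # verify the query syntax for the product version you deploy to.
    return "domain:" + ".".join(fqdn_labels(fqdn))


# Hypothetical per-tool translator registry: same input, tool-specific output.
TRANSLATORS: Dict[str, Callable[[str], str]] = {
    "carbon_black": to_carbon_black_query,
    "snort": lambda fqdn: to_snort_rule(fqdn, sid=1000001),
}

if __name__ == "__main__":
    for tool, translate in TRANSLATORS.items():
        print(f"{tool}: {translate('evil.example.com')}")
```

Validating the FQDN once, before any translator runs, keeps a malformed value from producing a broken rule in one tool and a silently different query in another.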

The feedback loop

Priorities, threats, campaigns and vulnerabilities are constantly changing, and to be dependable, any dataset must account for this. The DataLinq Engine supports a feedback loop in which it consumes context from the tools that have detection content deployed into them, as well as from systems that provide enrichment services. Because sightings can provide almost immediate updates to prioritization and scoring data, this feedback loop allows additional context to be learned over time.
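A minimal sketch of the feedback loop under stated assumptions: each distinct tool that reports a sighting adds a fixed bonus to an object's score, capped at 100, and the helper reports when a sighting pushes the object over the threshold. The bonus, cap and threshold values and the `record_sighting` helper are hypothetical; a production scheme might also account for sighting recency or source reliability.

```python
from dataclasses import dataclass, field
from typing import Set

# Hypothetical tuning values.
SIGHTING_BONUS = 10
MAX_SCORE = 100
THRESHOLD = 60


@dataclass
class ScoredObject:
    value: str
    base_score: int
    sighting_sources: Set[str] = field(default_factory=set)

    @property
    def score(self) -> int:
        # Each distinct reporting tool counts once, so repeats from one tool don't inflate the score.
        return min(MAX_SCORE, self.base_score + SIGHTING_BONUS * len(self.sighting_sources))


def record_sighting(obj: ScoredObject, source: str) -> bool:
    """Fold a sighting from a deployed tool back into the object.

    Returns True only when this sighting moves the score from below the
    threshold to at or above it, i.e. when a new action may be warranted.
    """
    was_below = obj.score < THRESHOLD
    obj.sighting_sources.add(source)
    return was_below and obj.score >= THRESHOLD


if __name__ == "__main__":
    domain = ScoredObject("evil.example.com", base_score=45)
    for tool in ["firewall", "edr", "edr"]:
        crossed = record_sighting(domain, tool)
        print(tool, domain.score, crossed)
```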

Take the next step to a data-driven approach to security

Security-forward teams are adopting a data-driven approach supported by an open integration architecture and balanced use of automation when dealing with the evolving nature of attacks. This approach best equips SOC teams to “connect the dots” across all data sources, tools and teams to accelerate threat detection and response across the entire organization.

The ThreatQ DataLinq Engine enables a data-driven approach across five key stages: ingestion, normalization, correlation, prioritization and translation. SOC teams finally have an efficient and effective way to make sense of data and operationalize it where required.

For all the details on the ThreatQ DataLinq Engine, download your copy of Accelerate Threat Detection & Response with DataLinq Engine. Want to take the next step? Request a demo or experience ThreatQ now.
